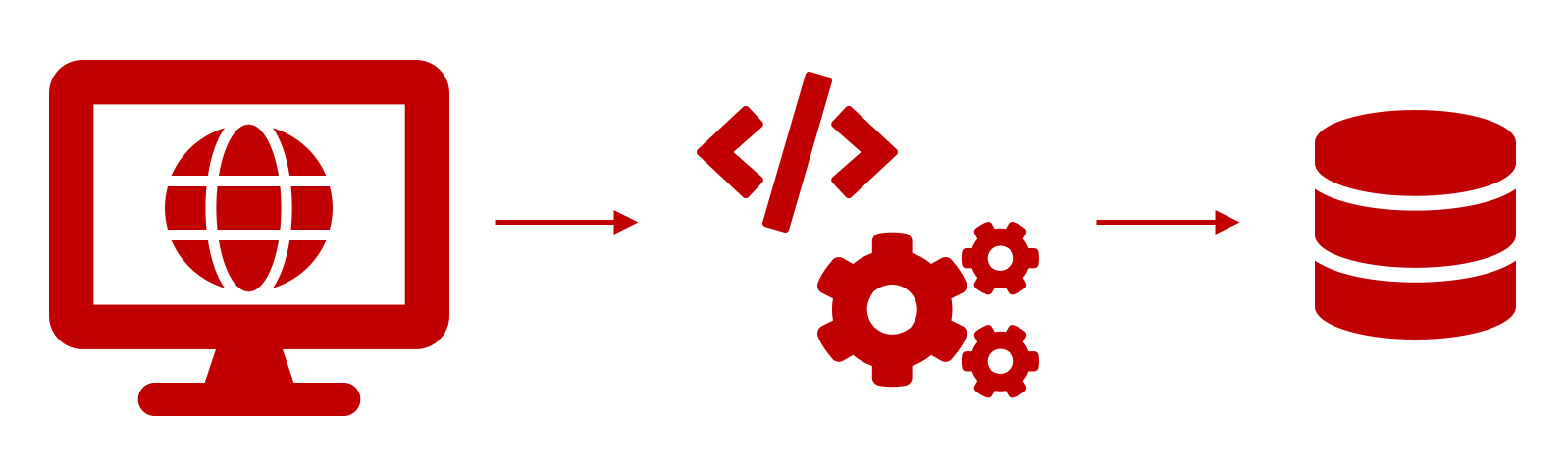

Today I'll show you how to automate repetitive internet searches. First, we build our own web scraper, which pulls the desired information from the web for us. Then we create a cron job that runs our program automatically at the desired times.

All of our time is precious and most of us try to use the 24 hours we have per day as efficiently as possible so that, in addition to completing all of our tasks, we also have a little time for the good things in (working) life.

How annoying are repetitive tasks then? Not only do they cost us a lot of time, they are also usually quite undemanding... No, I'm not talking about the daily walk to the rubbish bin 😉 Unfortunately, I don't have a digital solution up my sleeve for that yet. But pay attention to how many activities you do on your computer in exactly the same way over and over again. I bet everyone can think of something right away.

OK, but what can we do about wasting our valuable time on these tasks?

The solution to this is "automation".

Automation is not just a buzzword from the high-tech industry, but a way for all of us to let our computers take care of unpleasant, repetitive tasks.

Today I want to show you how something like this can work, using an example from my working life. As a freelancer, I always keep my eyes open for new projects where I can contribute my expertise (= euphemism for "I also have to make money" 😉 ). I do this, for example, with web portals where I enter certain keywords, click through the results pages and, while browsing, match the individual entries/tenders/offers with my expertise.

Boooooring and repetitive!!! So today we are going to write a program that solves this task with so-called web scraping, i.e. the program downloads the data from the web for us.

As an example, I have chosen the German federal government's e-tendering platform, as this involves some difficulties that we will solve in this tutorial in order to hopefully make life easier for you in similar projects (obviously, the keywords are in German on this platform, sorry!).

Step 1: Inspect page

First of all, let's look at the robots.txt file of the page. All you have to do is append 'robots.txt' to the base URL of the page (i.e. https://www.evergabe-online.de/robots.txt). This page contains information about which URLs SHOULD not be retrieved by web crawlers (more information about the Robots Exclusion Standard can be found here). In our example, the 'search' URL is not affected and we can continue without a guilty conscience.
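If you prefer to script this check, here is a minimal sketch using Python's built-in `urllib.robotparser`. The sample rules and the example.org URLs are invented for illustration; they are not the real contents of the platform's robots.txt. The helper name `is_allowed` is likewise hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Invented sample rules, NOT the real robots.txt of the tendering platform.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text permits fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(is_allowed(SAMPLE_ROBOTS_TXT, "https://example.org/search"))
    print(is_allowed(SAMPLE_ROBOTS_TXT, "https://example.org/admin/users"))
```

For a live check you would point the parser at the real file with `set_url()` and `read()` instead of passing the text in by hand.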

Now let's look at the individual elements of the page. For example, there is a user input "Suchbegriff" (="Search term"), a drop-down menu for the time of publication and a "Suchen" (="Search") button on the page. To obtain the html information on these elements, which we subsequently need for our program, all we need to do is right-click on the element and click on "Inspect Element" (Safari) or "Inspect" (Chrome).

If we enter a search term (our program should search for the terms "Umwelt" (="environment") and "Natur" (="nature")) on the page, we get a results table. We also have to inspect this. It has several columns and rows and, most importantly, it has several pages that we have to navigate to somehow. If we look at the stored html links, we see that the IDs of the numbered pages unfortunately do not follow any logic that I can understand. BUT there is a 'next' button (the ">" symbol) with which we can circumvent this problem by simply letting our program press this button repeatedly until we reach the last page.

Step 2: Writing the web scraper

Before we start programming, we quickly create a "virtual environment" and install the third-party libraries we will need later (i.e. BeautifulSoup, Pandas and Selenium; Math, Time, Re and Datetime are part of Python's standard library and need no installation). I do this for all my projects so that I can ensure that years from now my projects will still work exactly as I once planned them and won't be shot to pieces by updates to Python or libraries. I use the manager Conda for this (instructions for installation and operation here).

But now we really get down to programming. First we create a new script in Python with the name "webscraper.py" and import everything we need.

Afterwards we write the first of two functions. I have deliberately kept this very general (the parameters "Stichwort" (="keyword") and "Zeitraum" (="period") can be set) in order to enable the reuse of this function in other programs.

As you can see, the function is relatively complex, so we cannot go into every detail here. Nevertheless, I will try to give you an overview of the functionality:

First, we define which driver/browser we are working with, pull up the desired URL and enter the desired parameters in the search mask. Then we press the "Suchen" (="Search") button (lines 1 to 13). You may wonder about the waiting times I have built in. Normally, our program would simply work through each step and could be finished in a few moments. But the page we are working with must have enough time to load. That's what the waiting times are for.
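Fixed waiting times are the simplest way to give the page time to load. An alternative is to keep asking "is the page ready yet?" until the answer is yes or a time limit runs out. The following is only a sketch of that idea in plain Python; `wait_until` and its parameters are hypothetical names and not part of the script described here. Selenium ships a comparable mechanism with `WebDriverWait`.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 10.0,
               poll_interval: float = 0.5) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval)


if __name__ == "__main__":
    # Simulated page that only becomes "ready" on the third check.
    checks = {"count": 0}

    def page_ready() -> bool:
        checks["count"] += 1
        return checks["count"] >= 3

    print(wait_until(page_ready, timeout=2.0, poll_interval=0.1))
```

The advantage over a fixed pause is that the program continues as soon as the page is ready and gives up cleanly if it never is.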

Then we take the total number of results of the page and use it to calculate how many pages the results table has (lines 16 to 19). This is important later, because we have to tell the program somehow how often to press the "Next" button.
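The page calculation can be sketched roughly like this. I am assuming here that the page shows the hit count as text such as "123 Ergebnisse" and that each results page holds ten rows; both are assumptions for illustration, so check the real page before relying on them.

```python
import math
import re


def count_pages(result_text: str, rows_per_page: int) -> int:
    """Extract the first integer from `result_text` and return the page count.

    Assumes the number is written without thousands separators.
    """
    match = re.search(r"\d+", result_text)
    if match is None:
        raise ValueError(f"no number found in {result_text!r}")
    total_results = int(match.group())
    # Round up: a partly filled last page still counts as a page.
    return math.ceil(total_results / rows_per_page)


if __name__ == "__main__":
    print(count_pages("123 Ergebnisse", rows_per_page=10))
```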

Now we write empty lists for all elements of the results table that we want to capture (lines 20 to 26).

And now we get down to the nitty-gritty: We write ourselves a for loop embedded in a while loop (lines 28 to 52). The while loop runs through all the pages of the table, picks up their content and splits it into the individual rows of the table. Then the for loop runs through each line of the partial table. The text content of the individual cells is extracted and appended to the corresponding list.

Afterwards, an if-query clarifies whether there is another results page and, if so, the "Next" button is clicked. In this case, the while loop goes through another round.
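To make the control flow easier to follow, here is a stripped-down sketch of the while loop, the for loop and the "next" check. It deliberately leaves out Selenium and BeautifulSoup: `get_rows` and `click_next` are hypothetical stand-ins for "parse the rows of the current page" and "click the '>' button", and the column names are invented.

```python
from typing import Callable, Dict, List


def collect_table(get_rows: Callable[[], List[List[str]]],
                  click_next: Callable[[], None],
                  n_pages: int) -> Dict[str, List[str]]:
    """Walk through `n_pages` result pages and gather the cells column by column."""
    # One empty list per column we want to capture (hypothetical column names).
    columns: Dict[str, List[str]] = {"title": [], "authority": [], "deadline": []}

    page = 1
    while page <= n_pages:
        # For loop: one iteration per row of the page currently shown.
        for row in get_rows():
            for name, cell in zip(columns, row):
                columns[name].append(cell.strip())
        # Only press "next" if another results page exists.
        if page < n_pages:
            click_next()
        page += 1
    return columns


if __name__ == "__main__":
    fake_pages = [
        [["Project A ", "City X", "01.02.2030"], ["Project B", "City Y", "03.04.2030"]],
        [["Project C", "City Z", "05.06.2030"]],
    ]
    position = {"page": 0}

    table = collect_table(
        get_rows=lambda: fake_pages[position["page"]],
        click_next=lambda: position.update(page=position["page"] + 1),
        n_pages=len(fake_pages),
    )
    print(table["title"])
```

In a real scraper the two stand-ins would be replaced by calls that read the browser's current page source and click the button, with a waiting time after each click.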

Once all results pages have been picked up, we create a dataframe (a data set with a table structure; lines 58 to 61) from the filled lists, close the driver/browser (line 62) and return the dataframe (line 63).

Phew, we've got the hardest part behind us 🙂

Now let's write a second, much less complex function:

In this we now run our first function twice, once with the search term "Umwelt" and once with "Natur" (lines 2 to 3).

Then we merge the data frames obtained and delete any duplicates (lines 5 to 6).

We now filter the combined data set for further keywords by converting the description of the projects into a string and checking whether certain substrings are included (in my case, of course, these all have something to do with data analysis; lines 8 to 12).
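The merge, de-duplicate and filter steps can be illustrated without any third-party library. Note that this sketch swaps the data frames for plain lists of dictionaries; with Pandas you would typically reach for `pandas.concat`, `DataFrame.drop_duplicates` and `Series.str.contains` instead. The function names, the "title" column, the sample rows and the case-insensitive matching are all assumptions for illustration.

```python
from typing import Dict, Iterable, List

Row = Dict[str, str]


def merge_unique(*tables: Iterable[Row]) -> List[Row]:
    """Concatenate several result tables and drop exact duplicate rows."""
    seen = set()
    merged: List[Row] = []
    for table in tables:
        for row in table:
            key = tuple(sorted(row.items()))
            if key not in seen:
                seen.add(key)
                merged.append(row)
    return merged


def filter_by_keywords(rows: Iterable[Row], column: str,
                       keywords: Iterable[str]) -> List[Row]:
    """Keep rows whose `column` text contains at least one keyword (case-insensitive)."""
    lowered = [keyword.lower() for keyword in keywords]
    return [row for row in rows
            if any(k in str(row.get(column, "")).lower() for k in lowered)]


if __name__ == "__main__":
    umwelt = [{"title": "Datenanalyse Umweltmonitoring"}, {"title": "Neubau Kläranlage"}]
    natur = [{"title": "Datenanalyse Umweltmonitoring"}, {"title": "Statistik Naturschutz"}]
    combined = merge_unique(umwelt, natur)
    print(filter_by_keywords(combined, "title", ["datenanalyse", "statistik"]))
```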

Now we only need today's date and, if the data set is not empty (an empty data set means there were no hits), we can save it as a csv file with today's date in the file name (lines 14 to 18).
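As a rough sketch of this last step, again with plain Python instead of a data frame (with Pandas, `DataFrame.to_csv` would do the writing): the function name `save_hits` and the file name pattern `projects_<date>.csv` are my assumptions, not the names used in the original script.

```python
import csv
from datetime import date
from pathlib import Path
from typing import Dict, List, Optional


def save_hits(rows: List[Dict[str, str]], folder: Path,
              today: Optional[date] = None) -> Optional[Path]:
    """Write `rows` to '<folder>/projects_<YYYY-MM-DD>.csv'; skip empty results."""
    if not rows:
        return None  # no hits, so no file is written
    today = today or date.today()
    path = folder / f"projects_{today.isoformat()}.csv"
    with path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)
    return path
```

Called as `save_hits(hits, Path("."))`, this writes one dated file per run, so the weekly results never overwrite each other.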

Now just a tiny code snippet and we're done. This makes the program multifunctional: we can import the program as a module and, for example, use only the first function in other programs. But we can also let the program run on its own with this addition; then the second line of this snippet is executed, the program runs through completely and saves the projects that have been added to the platform with our search terms in the last seven days.
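The snippet in question is Python's usual "main guard". A minimal version looks like this; `run_weekly_search` is a hypothetical stand-in for the second function, whose real name is not shown here.

```python
def run_weekly_search() -> str:
    """Stand-in for the second function; the real one scrapes and saves the hits."""
    return "weekly search finished"


if __name__ == "__main__":
    # Runs only when the file is executed directly (e.g. `python webscraper.py`)
    # and is skipped when another program does `import webscraper`.
    print(run_weekly_search())
```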

Step 3: Automation

Done!!! 🙂

Or are we? Not quite. Now we still face the problem that we would have to run this program manually every week. Repetitive task and all, you remember...

But no problem. Computers today come with on-board tools that allow us to automate such tasks. On Unix-based systems, such as the Mac, this is Cron, for example. With this tool, we now want to ensure that our program is automatically executed every Monday at 12:00 noon.

To do this, we go to the console and enter the following:

crontab -e

Your standard text editor opens. For me it is vi, where we now enter 'i' to get into insert mode. Then we enter the following:

0 12 * * 1 /Users/Jochen/opt/anaconda3/envs/Webscraper/bin/python /Users/Jochen/Desktop/Analysen_Skripts/Webscraper/webscraper.py

Looks strange, right?

The first cryptic part tells our cronjob that we want the job to run at minute 0 of the twelfth hour on every Monday (an extremely helpful page to familiarise yourself with the cron syntax can be found here).

In the second part we refer to our 'virtual environment' and in the third to our program. Note that your paths will be different from mine!
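If the five schedule fields (minute, hour, day of month, month, day of week) still look cryptic, this small sketch may help. It checks whether a given moment matches a schedule such as "0 12 * * 1". It is a teaching aid with hypothetical function names, not how Cron itself is implemented, and it only understands '*' and plain numbers (no ranges, lists or steps).

```python
from datetime import datetime


def _field_matches(field: str, value: int) -> bool:
    """Match a single cron field; supports only '*' and plain integers."""
    return field == "*" or int(field) == value


def cron_matches(expression: str, moment: datetime) -> bool:
    """Return True if `moment` falls on the schedule given by five cron fields."""
    minute, hour, day_of_month, month, day_of_week = expression.split()
    # Cron counts weekdays from Sunday = 0 (7 also means Sunday);
    # isoweekday() gives Monday = 1 ... Sunday = 7, so we map Sunday to 0.
    weekday = moment.isoweekday() % 7
    time_ok = (_field_matches(minute, moment.minute)
               and _field_matches(hour, moment.hour)
               and _field_matches(month, moment.month))
    dom_ok = _field_matches(day_of_month, moment.day)
    dow_ok = day_of_week == "*" or int(day_of_week) % 7 == weekday
    # If both day fields are restricted, cron runs when either one matches.
    if day_of_month != "*" and day_of_week != "*":
        day_ok = dom_ok or dow_ok
    else:
        day_ok = dom_ok and dow_ok
    return time_ok and day_ok


if __name__ == "__main__":
    # 1 January 2024 was a Monday.
    print(cron_matches("0 12 * * 1", datetime(2024, 1, 1, 12, 0)))
    print(cron_matches("0 12 * * 1", datetime(2024, 1, 2, 12, 0)))
```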

Then we close the input with "Esc" and type ":wq". This closes the editor and, if everything was entered correctly, you get the message "crontab: installing new crontab".

Now we have actually done it!!!

We have written a web scraper that searches a website for hits with our search parameters and saves them in an easy-to-read format. This program is now run automatically once a week. So we don't have to do any more work and have gained additional time for meaningful activities 🙂

Have fun with web scraping and automating!

And of course, as always: If you have any questions, comments or ideas about this post, feel free to send me an email.